Multi-LLM Support

Different language models suit different tasks, and choosing the right one can noticeably affect both productivity and results. FunBlocks AIFlow supports multiple leading LLMs, so you can pick the model that best fits each workflow.

Official Support for Top-Tier LLMs

FunBlocks AI integrates with leading large language models, including OpenAI's GPT-4o, Anthropic's Claude, Google's Gemini, and DeepSeek, along with other state-of-the-art models. FunBlocks AI will also keep updating to the latest and most capable versions as they become available.

Why Access to Multiple Models Matters

Having multiple AI models at your fingertips isn't just a luxury—it's a strategic advantage:

- Leverage specialized capabilities: Each model excels in different areas. GPT-4o might handle certain creative tasks brilliantly, while Claude might offer superior reasoning for analytical problems.

- Match models to specific requirements: Choose the perfect AI partner based on your unique task requirements rather than forcing all your diverse needs through a single model.

Key Benefits of FunBlocks' Multi-Model Approach

- Complete freedom of choice: Select the ideal model for each specific workflow or task.

- Contextual continuity: Switch between models without losing your task context or having to repeatedly copy-paste prompts between different chatbot interfaces, dramatically improving efficiency.

- Cost-effective access: No need for multiple subscriptions to ChatGPT, Claude, Gemini, and other AI applications. With a FunBlocks AI Plan, you gain unlimited access to all premium AI models in one place.

Using Your Own LLM API Keys

Already have API keys for OpenAI, Gemini, Claude, DeepSeek, or any OpenAI-compatible API? FunBlocks AIFlow lets you use your private API keys, allowing you to access all of FunBlocks' AI capabilities without additional subscription costs.

How to Set Up Your Private LLM API Keys

Setting up your own API keys in FunBlocks AIFlow is straightforward:

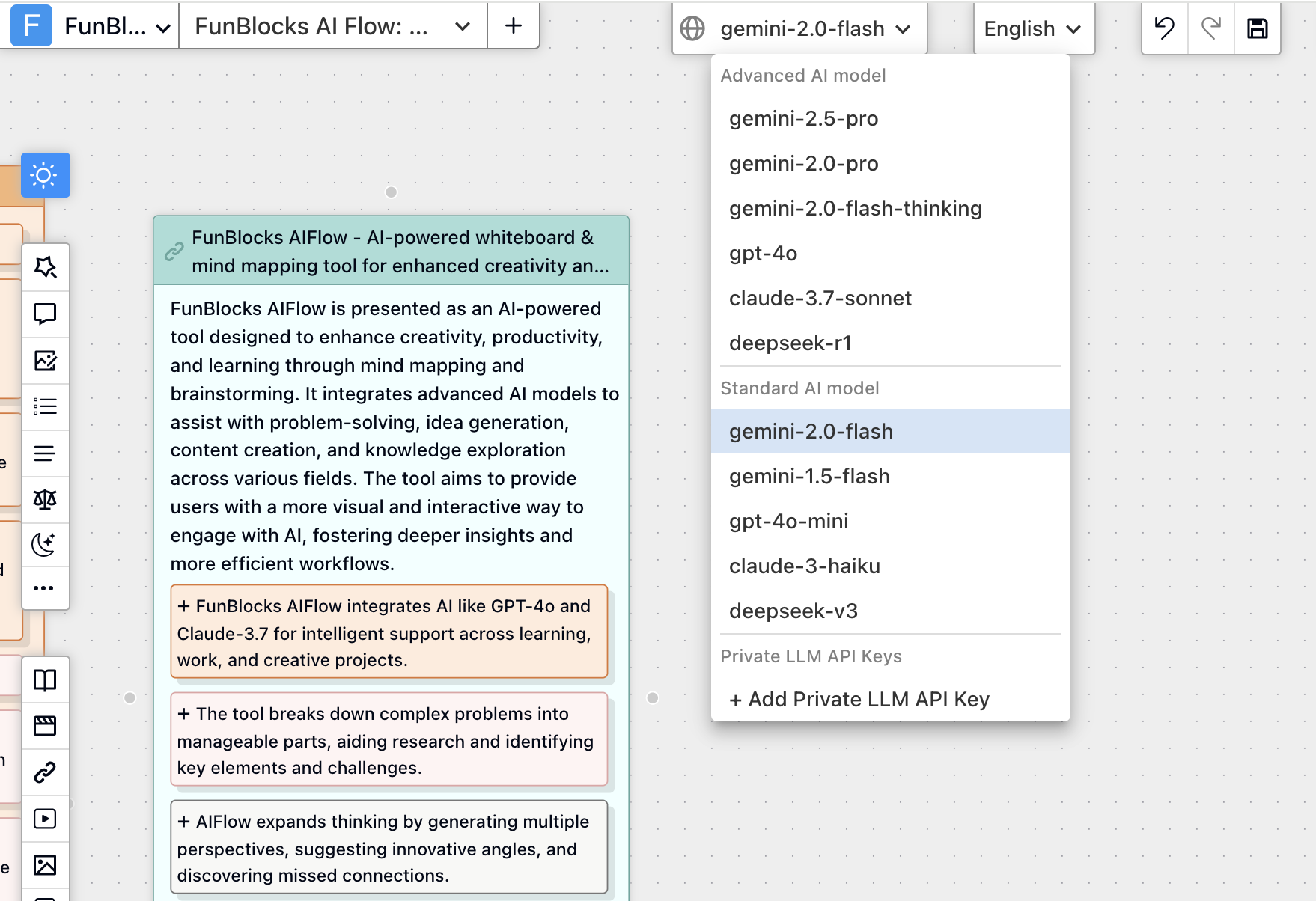

Step 1: Access the Model Selector

From the FunBlocks AIFlow editor interface, locate the model dropdown menu in the top-right corner (showing something like gemini-2.0-flash) and select "+ Add Private LLM API Key".

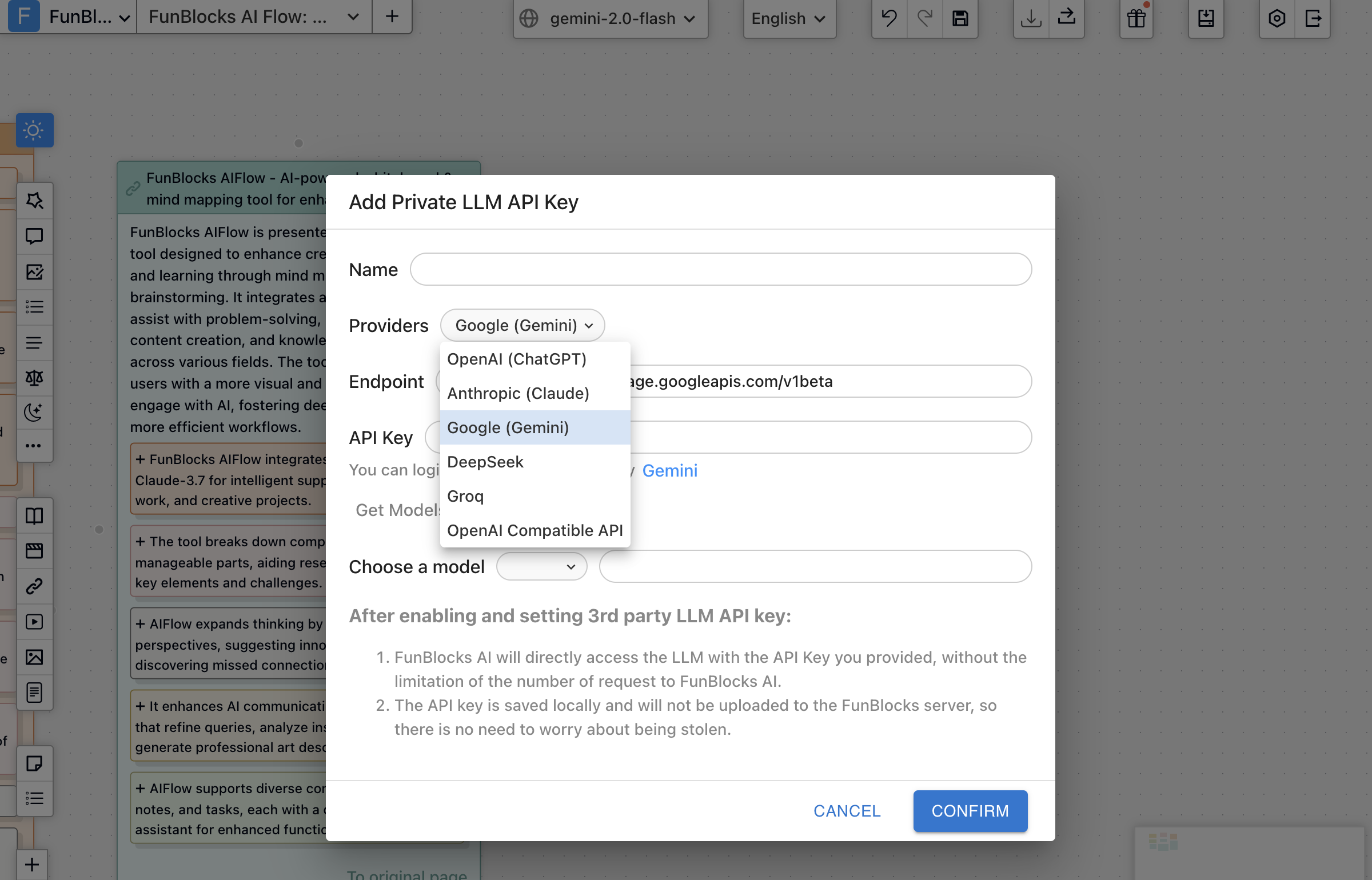

Step 2: Enter Your API Key Information

In the popup window, complete the following fields:

- Name: Create a recognizable label for this model (e.g., "My GPT-4 API").

- Provider: Select your model's service provider:

  - OpenAI (ChatGPT)

  - Anthropic (Claude)

  - Google (Gemini)

  - DeepSeek

  - Groq

  - OpenAI Compatible API (for self-hosted models with compatible interfaces)

- Endpoint: The default address typically populates automatically. Keep this unless you're using a custom service.

- API Key: Paste the API key from your provider.
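The fields in this dialog can be pictured as one small record. The sketch below is illustrative only: `PrivateModelConfig`, `DEFAULT_ENDPOINTS`, and the provider keys are hypothetical names, not FunBlocks' own data model. The endpoint values are the public base URLs each provider documents, assumed here to stand in for whatever default the dialog fills in.

```python
from dataclasses import dataclass
from typing import Optional

# Public API base URLs as documented by each provider. These are assumed
# stand-ins for the dialog's defaults; FunBlocks may use different values.
DEFAULT_ENDPOINTS = {
    "openai": "https://api.openai.com/v1",
    "anthropic": "https://api.anthropic.com",
    "google": "https://generativelanguage.googleapis.com",
    "deepseek": "https://api.deepseek.com",
    "groq": "https://api.groq.com/openai/v1",
}


@dataclass
class PrivateModelConfig:
    """Hypothetical record mirroring the dialog's fields."""
    name: str
    provider: str
    api_key: str
    endpoint: Optional[str] = None  # None means "use the provider default"

    def resolved_endpoint(self) -> str:
        """Return the custom endpoint if set, else the provider default."""
        if self.endpoint:
            return self.endpoint.rstrip("/")
        if self.provider not in DEFAULT_ENDPOINTS:
            # A self-hosted, OpenAI-compatible service has no default address.
            raise ValueError(f"provider {self.provider!r} needs an explicit endpoint")
        return DEFAULT_ENDPOINTS[self.provider]


config = PrivateModelConfig(name="My GPT-4 API", provider="openai", api_key="sk-...")
print(config.resolved_endpoint())
```

The design mirrors the dialog's behavior as described: the endpoint is optional for known providers and only has to be supplied for a custom or self-hosted service.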

Step 3: Select Your Model

Click the "Choose a model" dropdown menu. FunBlocks will automatically fetch the model versions available to your API key (such as gpt-4o, claude-3-opus, or gemini-1.5-pro). Select your preferred version. If the model you want is not shown in the dropdown menu, you can enter its modelId manually instead.
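For OpenAI-compatible providers, a model list like this is typically available from the `GET /models` route, which returns a JSON object whose `data` array holds entries with an `id` field. The sketch below shows that lookup as a way to check which model IDs a key can see. It assumes an OpenAI-style endpoint with bearer-token authentication, and the function names are hypothetical. How FunBlocks itself performs the fetch is not described here.

```python
import json
import urllib.request


def build_models_request(endpoint: str, api_key: str) -> urllib.request.Request:
    """Build a GET request for the OpenAI-style model listing route."""
    url = endpoint.rstrip("/") + "/models"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"})


def parse_model_ids(payload: str) -> list[str]:
    """Extract sorted model ids from an OpenAI-style response body."""
    body = json.loads(payload)
    return sorted(item["id"] for item in body.get("data", []))


def list_models(endpoint: str, api_key: str) -> list[str]:
    """Fetch and parse the model list (performs a real network call)."""
    request = build_models_request(endpoint, api_key)
    with urllib.request.urlopen(request, timeout=10) as resp:
        return parse_model_ids(resp.read().decode("utf-8"))


# Offline demonstration with a sample response body.
sample = '{"object": "list", "data": [{"id": "gpt-4o"}, {"id": "gpt-4o-mini"}]}'
print(parse_model_ids(sample))
```

Calling `list_models("https://api.openai.com/v1", your_key)` would run the same steps against a live endpoint; providers that are not OpenAI-compatible may expose a different route and response shape.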

Step 4: Confirm

Click the "CONFIRM" button in the lower right to save and activate your private model.

Benefits After Setup

Once configured, you can:

- Seamlessly call your private models within the AIFlow whiteboard

- Combine FunBlocks' visualization tools with your preferred AI models for brainstorming, mind mapping, and note-taking

- Access all AI features without FunBlocks plan restrictions while using your own API keys

Making the Most of Multi-Model Support

To maximize the benefits of FunBlocks' multi-model capabilities:

- Experiment with different models for similar tasks to identify which performs best for your specific needs

- Use specialized models for specialized tasks – for instance, creative writing with one model and data analysis with another

- Compare outputs from different models side-by-side to gain deeper insights
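One way to picture the side-by-side comparison is to send the same prompt to several models and collect the answers under each model's name. The sketch below uses stand-in functions instead of real API calls; `compare_models` and the stub models are hypothetical and only illustrate the pattern, not a FunBlocks feature or API.

```python
from typing import Callable, Dict


def compare_models(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Run one prompt through every model and key each answer by model name."""
    results = {}
    for name, ask in models.items():
        try:
            results[name] = ask(prompt)
        except Exception as exc:  # keep going if one model fails
            results[name] = f"[error: {exc}]"
    return results


# Stand-in callables; a real setup would wrap each provider's client here.
stub_models = {
    "model-a": lambda p: f"A says: {p.upper()}",
    "model-b": lambda p: f"B says: {p[::-1]}",
}

for name, answer in compare_models("hello", stub_models).items():
    print(f"{name}: {answer}")
```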

By thoughtfully selecting the right model for each context within FunBlocks AIFlow, you'll experience significant improvements in both efficiency and output quality, all within a single, integrated environment.